Setting Share Values - CPU Allocation for Virtual Machines

Given an LPAR's resource entitlement, the next step is to ensure a virtual machine has sufficient share of those resources by assigning either a relative share or an absolute share.

Helpful Commands:

Query Dispatching Priority:

Q SHARE userid

Set Dispatching Priority - full command:

SET SHARE userid {ABS|REL} share {LIMITHARD|LIMITSOFT} {ABS|REL} maxshare

Set the share for a service machine (e.g., TCPIP): SET SHARE userid ABSOLUTE nnn

If looping users affect system performance in your installation, the following setting has been tested and used at many installations to keep a looping user from impacting other users: SET SHARE userid REL 100 LIMITSOFT ABS 3

REL/ABS/Limithard/Limitsoft:

- ABSOLUTE SHARE is a percent of an LPAR.

- RELATIVE SHARE is comparable to an LPAR "weight".

- LIMITHARD caps resource consumption regardless of other user demands.

- LIMITSOFT caps resource consumption unless all users have received their target minimum, and there are no unlimited users who can consume resources.

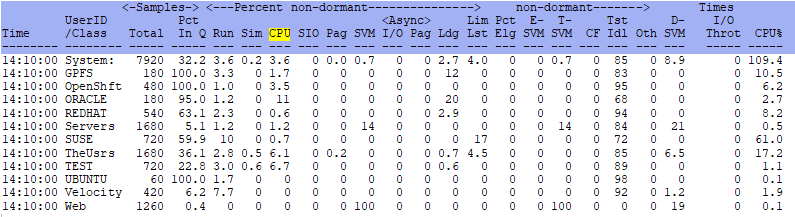

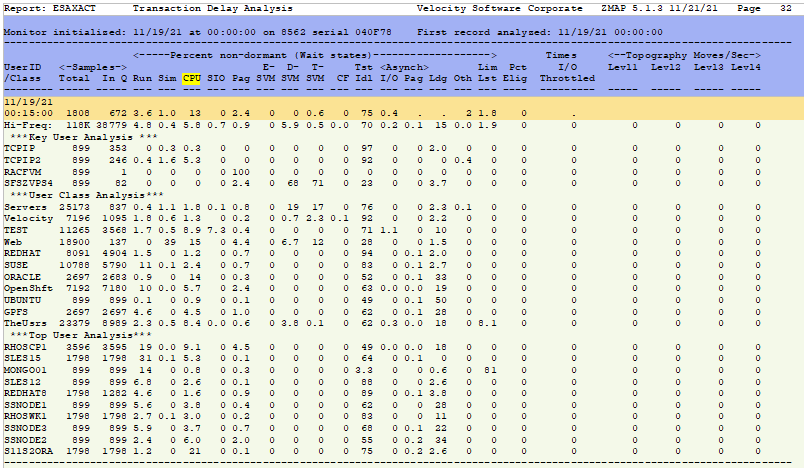

Helpful ESAMON screens/ESAMAP reports:

- ESAUSRC - User Configuration - shows current SHARE settings for users

- ESASRVC - Server Configuration - shows current SHARE settings for users defined as key users

- ESASUM - System Summary - SRMRELDL and SRMABSDL rows - shows ABS vs REL calculations

- ESAXACT - Transaction Analysis - shows CPU wait times (see examples below)

- ESATUNE - System Analysis and Tune Report (if ESATUNE is installed) - shows recommendations

For more information about setting SHARES and the dispatch list - see Scheduler and Dispatcher

Basic information:

CPU is prioritized based on the shares assigned to users. Absolute shares and relative shares are both normalized by the scheduler to determine when a user should be dispatched, based on how many other users are also currently requesting service. The scheduler does not track resource use - it only projects the rate at which users should be dispatched. That rate is based on the dispatch time slice multiplied by a factor. If a user's normalized share is one thousandth of the system, then the factor would be 1000. An I/O bias and a paging bias are then applied to move this up or down depending on how much paging or I/O is being requested by the users. The result is that all users are assigned a time of day by which they should be dispatched to obtain their share. Poorly tuned systems give their users a dispatching deadline of several seconds or even minutes into the future. By doing this, these installations are telling the scheduler that a response time of several seconds or minutes is the requirement...

Large Relative Shares:

Setting shares results in the CP scheduler setting user dispatch priorities. A scheduling algorithm compares each user to the other users and gives each user a dispatch priority that should guarantee the user a given amount of CPU. For example, a user that should get 20% of the CPU should get one time slice out of 5. A user that should get 1% of the CPU should get one time slice out of 100. If the time slice is set to 5 ms, then a 1% allocation should result in 5 ms of CPU out of the next 500 ms of time. On a system with multiple processors, the target would be divided by the number of processors. So a user allocated one percent would get one time slice out of 50 if there were two engines.
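The time-slice arithmetic above can be checked with a short sketch. The function names and parameters are illustrative only and are not part of CP; the model assumes nothing beyond what the paragraph states (one time slice out of every 100/share slices, divided by the number of engines).

```python
def slices_between_dispatches(share_pct, engines=1):
    """Number of time slices out of which the user should receive one."""
    return 100 / share_pct / engines


def dispatch_window_ms(share_pct, time_slice_ms=5, engines=1):
    """Elapsed time within which the user should receive one time slice."""
    return slices_between_dispatches(share_pct, engines) * time_slice_ms


print(slices_between_dispatches(20))            # 20% share: one slice out of 5
print(dispatch_window_ms(1))                    # 1% share, 5 ms slice: 500 ms
print(slices_between_dispatches(1, engines=2))  # 1% share, two engines: one out of 50
```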

Recently some have thought the scheduler to be broken. However, when one understands how the scheduler implements installation policy as set by user shares, its behavior becomes clearer. Installations that use the IBM recommendation of setting large relative shares ("SET SHARE TCPIP REL 3000") are telling the scheduler that TCPIP should get 30 times as much CPU as a default Linux server. With other large shares for virtual machines such as RACF, many installations allocate a very high percentage of the CPU resources to service machines. The scheduler then tries to schedule the real users so that they share the small remaining percentage.

If there were 40 users inqueue at RELATIVE 100 each, and TCPIP and RACF were both inqueue at RELATIVE 3000 each, then TCPIP and RACF together would be allocated 60% of the CPU resources and the users would share the remaining 40 percent (40 * 100 = 4000, as compared to 3000 + 3000). The intent of the scheduler is then that each user is guaranteed one time slice out of 100, and TCPIP and RACF are each guaranteed 30 time slices out of 100. This is the impact of using high relative shares for virtual servers, which is not a good idea.
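As a rough check of these numbers, the sketch below normalizes a set of relative shares into percentages. It is a simplified model that assumes every inqueue user has a relative share; the user IDs `USER01` to `USER40` and the function name are made up for illustration.

```python
def normalize_relative(rel_shares):
    """Map user -> percent of CPU when every inqueue user has a relative share."""
    total = sum(rel_shares.values())
    return {user: 100 * share / total for user, share in rel_shares.items()}


# Two service machines at REL 3000 plus 40 hypothetical users at the default REL 100.
inqueue = {"TCPIP": 3000, "RACF": 3000}
inqueue.update({f"USER{n:02d}": 100 for n in range(1, 41)})

shares = normalize_relative(inqueue)
print(shares["TCPIP"], shares["RACF"], shares["USER01"])  # 30% each vs 1% per user
```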

One of the first defaults to change is the share setting for the IBM default servers. Change them to ABSOLUTE share settings; otherwise looping users can take over.

With a time slice of 5 ms and one time slice out of 100, the scheduler will give each user 5 ms of CPU out of the next 500 ms. This means that you have just told the scheduler that each user has a deadline of half a second for every time slice, making it difficult to achieve subsecond response time. But that is what you have told the scheduler. In actuality, because the service machines don't use their allocation, the users will be dispatched far before their deadline. IBM has since changed its recommendation to using smaller relative shares or appropriate absolute shares, though you may still find different recommendations in the IBM documentation.

Imagine that VTAM has a relative share of 10000 and only one other user, with a relative share of 100, exists on the system. The user's normalized share is approximately 1% of the system. So on this one-user system, you have committed one time slice out of 100 to the user. With a time slice of 5 ms, this would set 500 ms as the target by which the user should be dispatched. If a second user logged on, this would not change much.

Relative vs Absolute Shares:

There is one other consideration when deciding whether to use relative or absolute shares. As workloads increase, absolute shares go up compared to other users, while relative shares go down. As load grows, do you want a large service machine's share (such as VTAM) to go up or down? As more users log on to the system, you would probably want VTAM's share to go up to manage the additional load. If you use relative shares, VTAM's normalized share will go down as users log on. So you may find that you artificially degrade response time as more users use the system - just because VTAM's share has been degraded. If you use absolute shares and set the share to the peak requirement at the peak hour, VTAM will always get the correct share.
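The effect can be illustrated with a small sketch that compares a server's normalized share as users log on. The values REL 1000 and ABS 20% are arbitrary illustrative settings, not recommendations, and the model assumes every other user runs at the default REL 100.

```python
USER_REL = 100  # default relative share assumed for each logged-on user


def with_relative_server(n_users, server_rel):
    """Return (server %, per-user %) when the server has a relative share."""
    total = server_rel + n_users * USER_REL
    return 100 * server_rel / total, 100 * USER_REL / total


def with_absolute_server(n_users, server_abs_pct):
    """Return (server %, per-user %) when the server has an absolute share."""
    return server_abs_pct, (100 - server_abs_pct) / n_users


for n in (10, 50, 100):
    rel_server, rel_user = with_relative_server(n, server_rel=1000)
    abs_server, abs_user = with_absolute_server(n, server_abs_pct=20)
    print(f"{n:3d} users: REL 1000 -> server {rel_server:5.1f}%, user {rel_user:4.2f}% | "
          f"ABS 20 -> server {abs_server:5.1f}%, user {abs_user:4.2f}%")
```

In this model the relative server's normalized share shrinks as users are added, while the absolute server keeps its 20% and so gains ground against each individual user.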

The default share of a virtual machine is Relative 100. Shares are converted to a current "normalized" value at every dispatch. Thus a relative share of 100 means one thing at one point in time but based on other work and other servers, something else at the next point of time. The default would put all virtual machines equal in the share of CPU resource. Setting a virtual machine at 200 would by design double its access to system resources.

The general rule for deciding whether to use a relative share or an absolute share is the requirement for the server. If there is an absolute requirement of 20% of the CPU resource of the LPAR, then SET SHARE ABSOLUTE 20% is appropriate. If the share of a server just needs to be higher, then increase the relative share accordingly. In today's systems with multiple LPARs, it is normally a business decision to allocate resources to specific workloads. There are multiple layers of resource allocation, and all layers will require analysis and understanding. The next step for allocating CPU inside the z/VM LPAR is setting shares.

Setting LPAR Weights to meet entitlement objectives:

The SET SHARE command provides a user with either an absolute share (of the system processor resources) or a relative share (relative to all other users). Setting a low user share will cause a user to wait for others on the dispatch list. Setting too many users with high shares will result in their waiting for each other. Care is needed when setting very large shares for several users because of this.

Shares are used in determining deadline priority by adding all the shares of inqueue users and then prioritizing them accordingly. Absolute shares and relative shares of current inqueue users are used to determine a user's "normalized" share. Absolute shares are added first and then subtracted from 100. If the absolute shares add up to more than 99, then they are reduced proportionately to total only 99. The remainder is split between the inqueue relative users based on relative shares. For example, if a guest HPO has an absolute share of 40% and there are 12 other users inqueue, all with relative shares of 100, then the total relative share is 1200. The remaining share of 60% is allocated equally to the 12 users (5% normalized share to each user). Given the same HPO with a 40% absolute share, if one additional user with a relative share of 600 is put on the dispatch list, the previous users' normalized share will drop to 3.3% (60% * (100 / 1800)), and the new user's normalized share will be 20%.
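The normalization steps described above can be sketched as follows. This is a simplified model built only from the description in this section, not the actual CP scheduler code; the data layout, the function name, and the user IDs other than HPO are assumptions made for the example.

```python
def normalized_shares(inqueue):
    """inqueue maps user -> ("ABS", percent) or ("REL", value).

    Returns user -> normalized share in percent.
    """
    abs_total = sum(v for kind, v in inqueue.values() if kind == "ABS")
    rel_total = sum(v for kind, v in inqueue.values() if kind == "REL")

    # Absolute shares totalling more than 99 are reduced proportionately to 99.
    scale = 99 / abs_total if abs_total > 99 else 1.0
    remainder = 100 - abs_total * scale

    result = {}
    for user, (kind, value) in inqueue.items():
        if kind == "ABS":
            result[user] = value * scale
        else:
            # Relative users split what the absolute users leave over.
            result[user] = remainder * value / rel_total
    return result


inqueue = {"HPO": ("ABS", 40)}
inqueue.update({f"USER{n:02d}": ("REL", 100) for n in range(1, 13)})
before = normalized_shares(inqueue)
print(before["HPO"], before["USER01"])                       # 40% and 5%

inqueue["BIGUSER"] = ("REL", 600)
after = normalized_shares(inqueue)
print(round(after["USER01"], 1), round(after["BIGUSER"], 1))  # about 3.3% and 20%
```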

The reason for using absolute shares instead of relative shares for service machines is that normalized relative shares drop when more users log on, while absolute shares increase as compared to other users. If more users log on to a system, you would want VTAM to get a proportionally higher share because VTAM now has more work to accomplish.

Absolute Share Considerations:

It is possible to set shares of users too low. Consider a situation where 10 users are in queue with a relative share of 100 and VTAM is in queue with an absolute share of 5%. VTAM's actual share is 5% as compared to the other users with actual shares of 9.5%. Another way to look at this is that VTAM's 5% absolute share is equivalent to a relative share of 53. When the load grows such that VTAM requires more CPU than 5%, VTAM will become a limiter to the system. The total relative shares and total absolute shares of the inqueue users are reported in ESASUM as SRMRELDL and SRMABSDL. These values can be used for computing equivalent relative and absolute values.
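A possible way to compute the equivalent values is sketched below. The formulas are inferred from the worked example in this section, on the assumption that SRMABSDL is the sum of the inqueue absolute shares (in percent, below 99) and SRMRELDL is the sum of the inqueue relative shares; the function names are illustrative.

```python
def abs_to_equivalent_rel(abs_pct, srmabsdl, srmreldl):
    """Relative share that would yield the same normalized share as abs_pct."""
    return abs_pct * srmreldl / (100 - srmabsdl)


def rel_to_equivalent_abs(rel, srmabsdl, srmreldl):
    """Normalized (percent) share currently received by a relative share."""
    return rel * (100 - srmabsdl) / srmreldl


# VTAM at ABS 5% with ten inqueue users at REL 100 each.
print(round(abs_to_equivalent_rel(5, srmabsdl=5, srmreldl=1000)))  # about REL 53
print(rel_to_equivalent_abs(100, srmabsdl=5, srmreldl=1000))       # 9.5% per user
```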

Absolute shares are recommended for ALL service machines. Service machines CANNOT afford to wait for CPU as this will artificially decrease the multi-programming level and increase end users' response time. If 30 inqueue users (with an average relative share of 100 each) is normal, then setting a high impact server to 3000 will guarantee the server one out of two available minor time slices when needed. Setting shares higher than 3000 may dilute the impact of reducing shares of other users, i.e. batch users. If one user has a relative share of 10,000 (the maximum) and two other users have shares of 50 and 100, the two users will have actual shares of 0.5% and 1%. If instead the large user has a relative share of 3,000, then the two users will have actual shares of 1.6% and 3.2%. Installations may find that large absolute differences between interactive and batch users are more effective in promoting interactive response time.

Setting Maximum Shares:

In VM/ESA 1.2.2, an enhancement to the SET SHARE command was made to provide the ability to place a limit on the amount of CPU that can be consumed by a user. Prior to this release, the share value assigned to a user was a minimum target of CPU only. If no other user needed resources, the highest share user would always get more CPU than the user's share. Now two types of limits are available. A soft limit specifies that the subject users are to receive no additional resources if any other non-limited user can consume them. A hard limit puts an unconditional cap on the user's ability to consume CPU cycles regardless of other demand (or lack thereof). It is both possible and reasonable to assign general users a relative target share and an absolute limit share.

Summary:

1 - Use ABS SHARE for important IBM service machines (like TCPIP) and potentially for important servers (such as a Linux machine that is doing online transactions). Verify expectations using ESAUSRC (SHARE configuration), ESAXACT (will show if a server is waiting on CPU) and ESAUSP2 (shows user utilization and how a server is doing compared to others).

2 - When setting ABS SHARE for a machine, if it does not use its allocation, the CPU will be freed up for others to use.

3 - Use caution when setting hard/soft limits.

Determining what SHAREs are set:

Use ESAUSRC and ESASRVC to review share settings for users and special users/servers. ESAUSRC shown below.

Verify that the correct machines have the correct SHARE settings/limits. Remember that if a machine has more than one vCPU, its SHARE is split equally among them.

Therefore, if a machine with the default REL 100 has 5 vCPUs, each vCPU effectively gets REL 20 - the machine's share needs to be raised to REL 500 so that each vCPU gets REL 100.
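The per-vCPU adjustment is simple arithmetic, sketched here with illustrative helper names and assuming the equal split described above:

```python
def share_per_vcpu(machine_share, vcpus):
    """Share each virtual CPU effectively receives when the share is split equally."""
    return machine_share / vcpus


def share_for_target(per_vcpu_target, vcpus):
    """Machine-level share needed so each virtual CPU gets the target share."""
    return per_vcpu_target * vcpus


print(share_per_vcpu(100, 5))    # REL 100 over 5 vCPUs: 20 per vCPU
print(share_for_target(100, 5))  # REL 500 gives each vCPU 100
```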

Measuring Effects of SHARE settings:

CPU Wait on the ESAXACT screen/report should drop for users with high shares.

User CPU utilization on ESAUSP2 will show how the server(s) in question are doing compared to the rest of the users.
