Resource Pool Definition and Analysis (formerly known as CPU pool)

Resource pooling is a way to define, balance and limit the amount of processor capacity that a group of users/servers (also known as guests) is allowed to use.

Setting SHARE values can limit an individual guest, but resource pooling allows restrictions by group.

For example, there might be a test group, a development group, a QA group and a production group.

If the production group needs more capacity, you can lower the cap on the test group and raise the cap on the production group.

Resource pooling can also assist when using the IBM License Metric Tool (ILMT) for sub-capacity licensing/pricing.

Things to keep in mind:

- Pools are defined by type of processor, CP or IFL, with the default being the primary core type of the partition.

- To schedule (assign/remove) a guest to a resource pool:

  - The type of CPU must match the guest's primary CPU type.

  - CPU affinity must be on in the directory for that guest.

- A guest can only be in one pool at a time.

- If a guest has a SHARE LIMITHARD setting, both the individual and the group setting will apply.

- Using LIMITHARD (percentage of system CPU resources):

  - When a processor is varied onto the system, the CPU resource of the pool is automatically adjusted accordingly.

  - A logical processor dedicated to a guest is ignored.

  - CP assumes each logical core (CPU) can run 100% busy, regardless of the LPAR's entitlement.

  - A LIMITHARD setting of 100% is equivalent to CPU NOLIMIT.

  - The LIMITHARD setting does not qualify for sub-capacity (IBM ILMT) licensing.

- Using CAPACITY (number of CPUs' worth of processing power):

  - When a processor is varied onto the system, the amount of CPU resource for the pool changes only if the capacity limit is more than the current CPU capacity of that system.

  - The CAPACITY setting is recognized for sub-capacity (IBM ILMT) licensing.

- If the CPU affinity for a pool is IFL and the last IFL core is varied off the system, the affinity will toggle to CPU affinity suppressed.

- If using SMT (Simultaneous MultiThreading), performance of resource pools may be affected.

- If using SSI, each member of the SSI cluster should have compatible pools (same name/type, but not necessarily the same limits) for user relocation.

- Since resource pool definitions do not survive an IPL, pool definitions should be in AUTOLOG1 (or in a COMMAND DEFINE statement in AUTOLOG1's directory entry) and each guest should have a COMMAND SCHEDULE statement in its directory entry.

- If using zPRO, resource pool definitions can be scheduled to change based on workload, such as allowing a batch resource pool a higher limit at night and then setting it back to a lower limit during the day. See Chapter 14 of the zPRO Administrator Guide.

- If using zALERT, alerts can be set up for the success/failure of the resource pool definition commands and/or for the amount of CPU being used by the pools (such as setting an alert for the batch pool hitting a certain level.)
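The LIMITHARD and CAPACITY bullets above can be read as a small arithmetic model. The Python sketch below only illustrates those bullets; it is not CP's actual algorithm. The function name `pool_ceiling_in_cores` and the `shared_cores` input (online cores of the pool's type, not counting dedicated ones) are assumptions made for this example.

```python
def pool_ceiling_in_cores(shared_cores, limithard_pct=None, capacity=None):
    """Approximate a pool's CPU ceiling, in cores' worth of processing power.

    shared_cores  -- online cores of the pool's type, not counting cores
                     dedicated to a guest (hypothetical input)
    limithard_pct -- LIMITHARD percentage, or None
    capacity      -- CAPACITY value in cores, or None
    """
    if limithard_pct is not None and capacity is not None:
        raise ValueError("a pool has either LIMITHARD or CAPACITY, not both")
    if limithard_pct is not None:
        # Percentage of the shared cores, each assumed able to run 100% busy.
        return shared_cores * limithard_pct / 100.0
    if capacity is not None:
        # A fixed number of cores, but never more than the system has.
        return float(min(capacity, shared_cores))
    # CPU NOLIMIT: the pool can use everything that is shared.
    return float(shared_cores)


# LIMITHARD follows the configuration: varying a fifth core on raises the ceiling.
print(pool_ceiling_in_cores(4, limithard_pct=20))  # 20% of 4 cores
print(pool_ceiling_in_cores(5, limithard_pct=20))  # 20% of 5 cores

# CAPACITY stays fixed unless it exceeds what the system currently has.
print(pool_ceiling_in_cores(4, capacity=1))
print(pool_ceiling_in_cores(4, capacity=6))
```

In this model a LIMITHARD pool grows and shrinks with the number of cores, while a CAPACITY pool keeps the same ceiling as long as the system has at least that many cores. That fixed ceiling fits the point above that CAPACITY, not LIMITHARD, is the setting recognized for sub-capacity licensing.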

Helpful system commands:

- DEFINE RESPool: Use to add a new resource pool (does not survive an IPL)

Syntax (uppercase letters show the minimum abbreviation):

DEFine {RESPool | CPUPool} poolname [CPU NOLIMit | [CPU] {LIMITHard cpulim | CAPacity cpucap}] [TYPE {CP | IFL}] [STORage NOLIMit]

Defaults: CPU NOLIMit and STORage NOLIMit.

Example - DEF RESP lnxtest CPU LIMITH 20% TYPE ifl

(creates a "Linux test" IFL resource pool with a hard limit of 20%)

Response - Resource pool LNXTEST is created

- SET RESPool: Use to change the CPU or storage limit settings

Syntax:

Set {RESPool | CPUPool} poolname [CPU NOLIMit | [CPU] {LIMITHard cpulim | CAPacity cpucap}] [STORage NOLIMit]

Defaults: CPU NOLIMit and STORage NOLIMit.

Example - SET RESP lnxtest CPU CAP 1

(updates the "Linux test" resource pool to have a cap of 1.00 of an engine)

Response - Resource pool LNXTEST is changed

- SCHedule: Use to assign/remove a user to/from a defined resource pool

Syntax:

SCHedule [USER] {userid | *} {[WITHIN] [POOL] poolname | NOPOOL}

Example - SCH USER linuxt1 POOL lnxtest

(adds user linuxt1 to pool lnxtest)

Response - User LINUXT1 has been added to resource pool LNXTEST

- Query RESPool: Use to display information about defined resource pool(s)

Syntax:

Query {RESPool | CPUPool} [ALL | [POOL] poolname [MEMBERS] | USER {userid | *}]

Default: ALL.

Example - Q RESP POOL lnxtest

(displays information about the lnxtest resource pool)

Response -

| Pool Name | CPU | Type | Storage | Trim | Members |
| --- | --- | --- | --- | --- | --- |
| LNXTEST | 1.00 Cores | IFL | NoLimit | ---- | 1 |
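Because pool definitions do not survive an IPL, the DEFINE and SCHEDULE commands have to be reissued at every startup. The Python sketch below shows one way to keep that list in a single place and generate the command strings from it. The helper names (`define_respool`, `startup_commands`) and the data layout are hypothetical. How the strings are issued (for example from AUTOLOG1 or from COMMAND statements in directory entries) is outside the sketch.

```python
def define_respool(name, kind, value, cpu_type):
    """Build a DEFINE RESPOOL command string for one pool."""
    if kind == "LIMITHARD":
        limit = f"LIMITHARD {value}%"
    elif kind == "CAPACITY":
        limit = f"CAPACITY {value}"
    else:
        raise ValueError(f"unknown limit kind: {kind}")
    return f"DEFINE RESPOOL {name} CPU {limit} TYPE {cpu_type}"


def startup_commands(pools, members):
    """Return DEFINE commands for every pool, then SCHEDULE commands.

    pools   -- {pool name: (kind, value, cpu_type)}
    members -- {userid: pool name}; a dict allows only one pool per guest
    """
    commands = [define_respool(name, *spec) for name, spec in pools.items()]
    for userid, pool in members.items():
        if pool not in pools:
            raise ValueError(f"{userid} refers to undefined pool {pool}")
        commands.append(f"SCHEDULE USER {userid} POOL {pool}")
    return commands


# Example data mirroring the commands shown above.
example_pools = {"LNXTEST": ("LIMITHARD", 20, "IFL")}
example_members = {"LINUXT1": "LNXTEST"}
for line in startup_commands(example_pools, example_members):
    print(line)
```

Keying `members` by userid reflects the rule that a guest can be in only one pool at a time, and the undefined-pool check catches a SCHEDULE that would otherwise name a pool that was never defined.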

Helpful ESAMON screens/ESAMAP reports (further explained below):

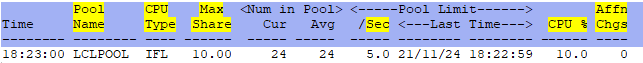

- ESAPOOL - CPU Pool Utilization - This shows information and utilization for CPU pools.

- ESAUSP2 - User Percent Utilization - This shows utilization information for a user or class.

- ESAXACT - Transaction Delay Analysis - This shows an analysis of virtual machine states and wait states.

ESAPOOL - This shows information and utilization for any defined resource pools. Helpful Resource Pool information:

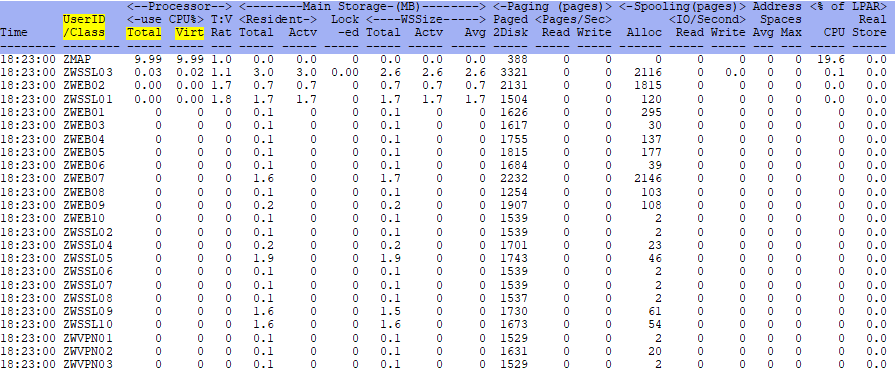

ESAUSP2 - This shows utilization information for a user or class. Clicking on the pool name (zVIEW) or pressing PF2 (z/VM) while in ESAPOOL makes ESAUSP2 show all the users in that resource pool and their utilization. Helpful Resource Pool information:

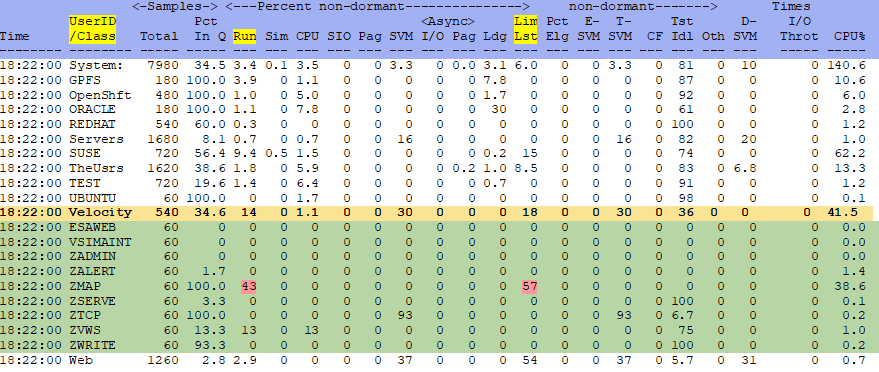

ESAXACT - This shows virtual machine state and wait state analysis information. Helpful Resource Pool information:

Example of using RESPOOLs - Allow batch processing more power at night but limit it during the day

- DEFINE RESPOOL batch CPU LIMITH 20% TYPE ifl - create a batch pool with a small limit.

- DEFINE RESPOOL prodonl CPU LIMITH 80% TYPE ifl - create a production online pool with a high limit.

- SET RESPOOL batch CPU LIMITH 80% - change the limit for the batch pool at 8pm to a higher limit.

- SET RESPOOL batch CPU LIMITH 20% - change the limit back at 6am when the production onlines need the power.

- Set the two SET RESPOOL commands up in the zPRO scheduler at the appropriate times so the change is automatic.

- Use ESAPOOL/ESAUSP2/ESAXACT to verify the changes caused the expected results.
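The day/night switch in the steps above comes down to a time window that wraps past midnight. The Python sketch below illustrates only that time logic. It does not use or represent the zPRO scheduler, and the function names and default hours (8pm and 6am, taken from the example) are assumptions for illustration.

```python
def batch_limit_pct(hour, night_start=20, night_end=6, night_pct=80, day_pct=20):
    """Return the batch pool's LIMITHARD percentage for an hour of day (0-23)."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be between 0 and 23")
    # The night window wraps past midnight: 20:00 up to, but not including, 06:00.
    is_night = hour >= night_start or hour < night_end
    return night_pct if is_night else day_pct


def batch_limit_command(hour):
    """Build the SET RESPOOL command that matches the given hour."""
    return f"SET RESPOOL batch CPU LIMITHARD {batch_limit_pct(hour)}%"


# Show the command on each side of the two switch-over times.
for hour in (5, 6, 19, 20):
    print(f"{hour:02d}:00  {batch_limit_command(hour)}")
```

The window test uses `or` rather than `and` because the night period spans two calendar days; a test of the form `night_start <= hour < night_end` would never be true when the start hour is later than the end hour.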

Conclusions:

Resource pooling is a good way to manage the amount of CPU processing power used by groups of guests, from both a cost and a workload perspective. It can also prevent overconsumption by looping guests or by guests that have "aggressive" workloads for a short period of time.
